Dependent Types and the Art of HTTP Headers

Introduction

A few years ago, Amos (AKA fasterthanlime) wrote a really great article entitled Aiming for correctness with types. It's a lengthy post, but at heart it was about how, by more faithfully modeling our world at the type level, we can avoid a great number of runtime bugs & errors (a topic near to my heart). He used as a running example the problem of building an authenticating proxy for a web service. In particular, he dug in to modeling HTTP headers, calling out the Rust hyper crate as a particularly expressive model; one in which "you can only build something that's meaningful".

This is also known as "correctness by construction": if you can build an instance of your type, if the constructor returns successfully, then you know that that instance satisfies certain properties (as an aside, I loathe the "create-then-init" idiom that was at one point, and is perhaps still for all I know, common among Java programmers). Providing for this becomes interesting when it comes to HTTP header names because, while they are commonly modeled (e.g. here, here, here & here) as strings, in fact the salient RFCs restrict them to a fairly small subset of ASCII. Furthermore, the RFCs state that they are case-insensitive: "Host", "host", and "HoSt" should all be considered as the same header.

hyper, quite sensibly, provides for creating instances of their HeaderName from strings or buffers of byte. An arbitrary Rust string, which is just a byte string that is guaranteed to be a valid UTF-8 encoding of text, cannot, by virtue of its type, be known to be a valid header name (to say nothing of an arbitrary array of byte). The constructors therefore validate their input, either panicking or returning an error result if the input does not meet their preconditions. In this way, type HeaderName is indeed correct by construction: if you successfully construct an instance, you are guaranteed that it's valid. Well & good.

And yet. If, at compile time, I wish to construct a HeaderName from the string "Host", I know that that is legitimate– why do I need to accept a possible panic or unwrap an error code? Yes, hyper offers a pre-defined header for "Host", since it's standard, but replace that with "X-My-Custom-Thing" (yes, yes, I'm aware of RFC 6648, this is just for the sake of argument). What if I could encode the preconditions into the type, and make the attempt to construct an invalid header name like "名字" into a compile-time error?

Enter dependent types. I introduced them in my posts on the lambda cube, albeit in a fairly abstract, formal way. In this post, I return to them, hopefully in a more practical, down-to-earth manner, and show how they can help us get closer to correctness with types.

In the next section, I'll discuss dependent types with a lot less type theory. Then I'll look at how to "reason" at the type level. Then, we'll look at modeling HTTP header names in Idris, a functional dependently-typed language I'm learning.

Dependent Types

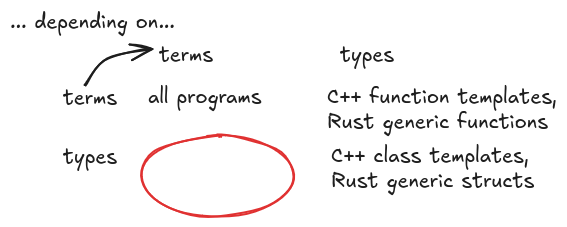

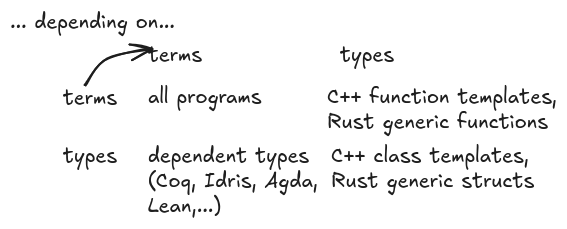

Dependent types are not well-known in the software development world because languages in common use in industry don't offer them. I'm going to describe them by relating them to things with which we're already familiar: terms and types. Terms are expressions in our language, and types serve to categorize them. Since we combine terms to compute, we might say that all of our programs, regardless of language, are made up of terms that depend on other terms.

int f(int x) { return x * 2; // ----- // 👆 this is a term; its type is `int` }

Now consider the following C++ function template:

template <typename T> T f(T x);

f isn't a term that we can use in our program, not directly. We can only invoke f once we supply a template parameter, say int. f<int>() is now a function that we can invoke; we can take its address and generally make use of it in our program– it is a term. We might regard the function template f as a function from types to terms, and in that way we can say that f<int>() is a term that depends upon a type.

Now consider the Rust Vec struct:

struct Vec<T>

Here again, T is a generic type parameter to Vec; I can't construct a Vec, only a Vec<usize> or a Vec<f64>, or whatever. We might regard the generic struct Vec as a function from type to type, and in that way we can say that Vec<usize> is a type that depends upon a type (I handed Vec a type, usize in this case, and received a type in return: Vec<usize>).

This all leaves one more possible dependency between types & terms:

Dependent types occupy that final slot:

Dependent types can be viewed as indexed type families, written as a mapping \((x:A)\rightarrow B_x\) where \(A\) is a type, \(x\) is an element in \(A\), and \(B_x\) is a type that depends upon, or is indexed by the value \(x\). We say that \(B_x\) is the type "at \(x\)".

Let's look at a few concrete examples to fix ideas. Here's the simplest dependent type I can think of: a function that takes a bool and returns a type. Of course, we can't actually write such a function in C, but I'll write it in C-like pseudo-code:

f(bool x) -> type { if (x) return int; else return string; }

\(f\) is a function from \(bool\) (the "index type") into the type of all types. We write \(f: bool\rightarrow type\). Under \(f\), \(int\) is the type at \(true\) and \(string\) is the type at \(false\).

Dependent types can exist at two "levels": the above, in which we have a mapping from a type (\(bool\), in this case) into the type of all types. We can also go one level "down", from the index type into the set of types determined by the source type:

g(bool x) -> f(x) { if (x) return 11; else return "Hello, world!"; }

Here, the type-checker can "compute" the return value of \(g\) from \(x\), and "see" that this implementation type-checks.

In his talk Dependent Types for Practical Use, Andre Videla had a nice summary of our situation:

| C | terms depend on terms | First class pointers |

| Java (generics) | terms depend on types | First class objects |

| Haskell (type families) | types depend on types | First class functions |

| Idris | types depend on terms | First class types |

Previously, I wrote about dependent types in a post on the type system λ-P. There, they were described as "Π-types" that generalized the arrow types with which we're already familiar as a way of representing functions. A function from bool to int would be said to have type \(bool\rightarrow int\). We generalize this by allowing the value on the left-hand side of the arrow to affect the value on the right (as in f(), above).

In that post, I used the example of \(\mathbb{V}_n\), the set of (double-precision) lists of length \(n\). In the λ calculus, a term representing this indexed type family would be \(\lambda n.\mathbb{V}_n\): given an \(n\in \mathbb{N}\), you get back the type \(\mathbb{V}_n\). This is just like f(x), above: given a \(b\in bool\), you get back the type \(f(b)\).

We might imagine a function that, given \(n\in \mathbb{N}\) returns the zero vector for the type \(\mathbb{V}_n\), call it zero(n). Then the type of zero(n) would be \(\Pi n:\mathbb{N}.\mathbb{V}_n\). This is analagous to \(g\), above: \(g\) would have, in λ-P, type \(\Pi \enspace b:bool.f(b)\).

The relationship between \(\mathbb{V}_n\) and zero(n) is shown here:

where ∗ is the type of all types, and \(\square\) represents the universe above \(\ast\).

Idris

For the rest of this post, I'm going to write in Idris, a dependently-typed functional language. I hope to sketch enough of the Idris syntax here to allow the reader to follow the rest of the post.

There are a few ways to define new types in Idris, but the workhorse is this:

data Foo : arguments -> Type where -- 👈 type constructor ctor1 : arguments -> Foo... -- 👈 data constructor ctor2 : arguments -> Foo... -- 👈 data constructor ... -- ...

Here, Foo is the name of our type (or, more generally, our type family). Everything after the colon in the first line is the "type constructor"; here is where we define the indices of our type family (if any).

ctor1, ctor2, and so forth are the "data constructors"– these are the rules for constructing actual instances of our type (family). Each data constructor will take zero or more arguments and result in an instance of our type (or one of our types, if we're defining a type family).

The simplest example I can think of is:

data Bool' : Type where False' : Bool' True' : Bool'

This just asserts that there exists a type Bool' (there's already a type Bool, so I named mine so as not to conflict with it) with two constructors (False' & True'). The constructors take no parameters, so they can be regarded as constants. In other words, this type has exactly two members. Actually, I'm pretty-sure that you can't use ' in a name in Idris, but I hope the intent is clear.

A simple "record type" representing a user with a first name, last name & age might be:

data User : Type where MkUser : String -> String -> Int -> User

This asserts that User is a type & provides one way to construct instances. That constructor requires two strings & an int before it returns a User instance.

One would construct an instance like so:

let x = MkUser "John" "Doe" 44 in printLn $ "I have a user " ++ show x

This isn't terribly convenient, in that we'll have to provide accessors for each field, we have to remember that the first string is the first name, the second string the surname &c. Idris provides a more ergonomic way of defining records, but that won't concern us here.

The reader will note that function invocation in Idris (functional programmers speak of "applying" a function to an argument, or "function application") is denoted by simply writing the function & its arguments next to one another, possibly with parentheses to indicate precedence, if needed.

A slightly more interesting example is the unsigned integers:

data Nat' : Type where zed : Nat' succ : Nat' -> Nat'

Again, there's already a type Nat, so I named mine differently, so as not to conflict.

This is a recursive type, because it's defined in terms of itself. We are stating that Nat' is a type, and that there are two ways of producing elements of Nat':

- there is the constant

zed; i.e. the termzedis an element of the typeNat' - the function

succ(this is an abbreviation of "successor"): in this case, given another inhabitant of typeNat', call itn,(succ n)is also inNat'

So what does this type look like? Well, starting from nothing, all we have is the constant zed, so we know that Nat' looks like: {zed, ...maybe more stuff}.

OK: now that we have a Nat' in hand, we can use the successor function to give ourselves another one: succ zed. But we can then apply succ to that, to get another member of type Nat': succ (succ zed). And so on.

In other words, Nat' consists of an infinite series of applications of the successor function: {zed, succ zed, succ (succ zed), succ (succ (succ zed)), ...}. You may recognize this as the Peano numbers.

This may look surprising if you're used to the more traditional representation of the naturals in industrial languages, but as we'll see below it's a surprisingly handy representation for other purposes.

Propositions as Types

Examples of things we can represent with dependent types include:

- length-indexed lists that allow us to write a SAXPY operation where passing vectors of different lists is a compile-time error

- writing a "printf" replacement where the type & number of subsequent arguments is computed at compile-time based on the format string, turning a failure to supply the correct number & type of replacement parameters into a compile-time error (my first segv as a newbie programmer was exactly such an error in using printf)

- writing type-safe heterogeneous lists

- writing functions that take as an argument a value describing a table schema, whose return type is calculated from that schema

Here, however, I'd like to demonstrate a different way to make use of dependent types. Let's take a look at the following Idris type:

data Even : Nat -> Type where ZedIsEven : Even Z Plus2IsEven : Even n -> Even (S (S n))

(Note that I've moved over to the built-in Nat type; it's just like Nat' above, but zed is now Z and \(succ\) is just S). Let's think about this: this is a type family, indexed by \(\mathbb{N}\). In other words, to every \(n\in \mathbb{N}\), there corresponds a type

\(Even \enspace n\). How can we construct elements of these types? Well, we have two data constructors. The first is a constant: \(ZedIsEven\) and it inhabits the type \(Even \enspace Z\).

The second is a function. If we already have an inhabitant the type \(Even \enspace n\) laying around, let's call it \(n\), then \(Plus2IsEven \enspace n\) is an inhabitant of \(Even \enspace (succ \enspace (succ \enspace n))\) (or, of \(Even \enspace (n+2)\)). So we see that we have:

| term | type |

|---|---|

| ZedIsEven | Even 0 |

| Plus2IsEven ZedIsEven | Even 2 |

| Plus2IsEven (Plus2IsEven ZedIsEven) | Even 4 |

| Plus2IsEven… | Even 6 |

and so on.

Alternatively:

| type | members |

|---|---|

| Even 0 | ZedIsEven |

| Even 1 | none– the type is empty |

| Even 2 | Plus2IsEven ZedIsEven |

| Even 3 | none |

| Even 4 | Plus2IsEven (Plus2IsEven ZedIsEven) |

| Even 5 | none |

| … |

In other words, we've constructed a type family, indexed by \(\mathbb{N}\) whose members are inhabited only at the evens. There's just no way to construct an element of type Even 1, or Even 3, and so on.

There's another way to look at this: the type \(Even \enspace n\) can be regarded as a proposition: "\(n\) is even". The indexed type family \(Even\) can be viewed as a predicate over the naturals. Now, propositions can be true or false; "1 is even" is a legitimate proposition, it just happens to be false. Furthermore, if we equate a proposition being true with the corresponding type being inhabited, things line-up neatly for us: we see that the types in \(Even\) that are actually inhabited are just those that correspond to true propositions.

You may have heard the catchphrase "proofs are programs"; by this we mean that we can prove propositions to be true by writing programs that produce terms inhabiting the type corresponding to those propositions.

What can we do with such a thing? We can, dare I say it, leave proofs in our code instead of tests. Let us suppose we have a function; a very simple function, call it h() (since we've already used f() & g()):

h : Nat -> Nat h x = x*2

And suppose we'd like to verify that our function always returns an even number. We could of course write tests in which we apply h() to various inputs & verify that the results are even, but that would only prove that h() returns an even for those particular inputs. Let's try to do a bit better: let's assert that for every natural x, we can find an inhabitant of the type Even (h x). In other words, you give me a \(Nat\) \(x\) and I'll give you a term in \(Even \enspace (h \enspace x)\). Well, that's a function from \(Nat\) to \(Even \enspace (h \enspace x)\):

h_is_even : {x : Nat} -> Even (h x)

Don't worry about the curly braces– they just mark \(x\) as an

implicit argument. That's just a declaration; now we have to provide

an implementation. Functions in Idris (like other functional languages

such as Haskell) are typically defined by pattern-matching on their

arguments. Being a Nat, x can have only two forms: either Z or

S k for some other Nat k:

h_is_even : {x : Nat} -> Even (h x) h_is_even {x = Z} = ?h_is_even_rhs0 h_is_even {x = (S k)} = ?h_is_even_rhs1

The bits beginning with '?' are "holes": expressions to be filled-in later. Idris lets us "sketch-out" our implementation, leaving these holes for terms that we haven't yet figured-out (sort of like the todo!() macro in Rust). Interactively, in the editor, we can ask Idris the type of the first hole, ?h_is_even_rhs0, and Idris will tell us \(Even \enspace 0\). That's because, for this match arm, the argument \(x\) is zero, so we know \(h \enspace x\) will also be zero. There's only one inhabitant of that type (we can even have Idris fill it in for us), so that's one hole down:

h_is_even : {x : Nat} -> Even (h x) h_is_even {x = Z} = ZedIsEven h_is_even {x = (S k)} = ?h_is_even_rhs1

The second case is more interesting. If we ask Idris to search for a term, it will return failure. I mean, we know how it should go: we'll just be applying Plus2IsEven to… well, to whatever the image of k under h_is_even is. When \(x=1\), the term we want is \(Plus2IsEven \enspace ZedIsEven\). When \(x=2\), the term will be \(Plus2IsEven \enspace (Plus2IsEven \enspace ZedIsEven)\), and so on.

If we were working on the logic side of things, we'd be proceeding by induction: the base case would be zero, which we've solved. Now we need the inductive step, which looks like this: given a proof that \((h \enspace k)\) is even, we need to produce a proof that \((h \enspace (k+1))\) is even.

The computational equivalent of induction is recursion. If we know the result for \((h \enspace k)\), then the result for \(x\) is \(Plus2IsEven\) applied to that thing:

h_is_even : {x : Nat} -> Even (h x) h_is_even {x = Z} = ZIsEven h_is_even {x = (S k)} = Plus2IsEven (h_is_even {x=k})

And that's it– no testing needed. We've just left a proof of the property we're asserting lying in our code. If subsequent changes break that property, that will manifest as a compile-time error.

A second application is picking-out a subset of an already-defined type. Let's suppose we want to write a function to halve its input:

half : Nat -> Nat half Z = Z half (S (S k)) = 1 + half k half (S k) = half k

Fine. And yet: it's unsatisfying to truncate odd numbers. Wouldn't it be nicer if we could only define half() over the evens? With propositions, we can:

half2 : (n : Nat) -> Even n -> Nat half2 Z ZIsEven = Z half2 (S (S k)) (Plus2IsEven x) = 1 + half2 k x

half2() takes a second parameter, an element of Even n. Looked at another way, this argument could be viewed as a proof that n is even (if we can come-up with an element of Even n, then we know n is even). And indeed, the type-checker sees this: it doesn't demand that we handle the case half2 (S k).

Alright, but now our callers are forced to provide said proof term whenever they want to invoke our function, like so:

half2 : (n : Nat) -> Even n -> Nat half2 Z ZIsEven = Z half2 (S (S k)) (Plus2IsEven x) = 1 + half2 k x namespace test x : Nat x = half2 4 (Plus2IsEven (Plus2IsEven ZIsEven))

To avoid this, we can leverage Idris' support for auto implicit arguments. By making the proof term both implicit and marking it "auto", Idris will search for a proof:

half3 : (n : Nat) -> {auto prf : IsEven n} -> Nat half3 Z {prf = ZIsEven} = Z half3 (S (S k)) {prf = (Plus2IsEven x)} = 1 + half3 k namespace test x : Nat x = half3 8 failing y : Nat y = half3 7 -- 👈 fails with "Can't find a value of type Even 7"

Proving Equality

Idris uses the same notion as Rust traits, but they're called interfaces here. The interface for expressing equality is \(Eq\), and it defines the operators == and /=:

Eq : Type -> Type (==) : ty -> ty -> Bool (/=) : ty -> ty -> Bool

This is "boolean equality": we compare two terms at runtime & get a boolean back. Now, while it might be surprising, there's nothing actually stopping us from implementing operator == for our type to, say, just return \(True\) all the time, or never. In fact, if you go to the docs for the analagous Rust trait Eq, you'll see that it documents certain behaviors the implementation must provide, and notes "This property cannot be checked by the compiler… Violating this property is a logic error." In other words, Rust programmers just shouldn't do such things.

Idris provides a dependent type, =, that does for the equality relation what \(Even\) did in the last section:

data (=) : a -> b -> Type Refl : x = x

What this means is that = is a type family indexed by two other types. In other words, if you give it two types, it will give you a type in return. There is only one way to produce members of the resulting type family: \(Refl\), which is in type \(x=x\). For instance, \(Refl \enspace 1 \enspace 1\) is a member of \(1=1\), but there is no way to construct an element of type \(1=2\), say.

The reader may wonder why the terms appearing on either side of the = are permitted to be different; we'll get to that, but for now I'll just note that there is a variant that adds that constraint, === (yes, three '=' characters):

public export (===) : (x : a) -> (y : a) -> Type (===) = Equal

This can be found in the standard library, and is documented as "An equality type for when you want to assert that each side of the equality has the same type, but there's not other evidence available to help with unification."

Conceptually, we have:

\begin{align} \label{eq:1} x==y&:&Bool&:&Type \nonumber\\ proof&:&x = y&:&Type \nonumber\\ \end{align}Representing Header Names in Idris

We now turn to a practical application of all this, as well as the article I mentioned at the start of this post: HTTP headers. This is one of those areas, common throughout web services, where something seemingly simple can turn into a deep rabbit hole. Recall that an HTTP/1.1 message has a seemingly straightforward structure:

GET / HTTP/1.1

Host: indieweb.social

User-Agent: curl/8.11.0

Accept: application/activity+json

...

{...}

It's not clear from the above, but lines in HTTP requests are delimited by carriage return/newline pairs. The first line is the request line: verb, URI, and the protocol version (separated by a single space in each case). After that are zero or more lines of headers. Each header consists of a header name, a colon & a space, then the header value. Next comes an empty line, followed by an optional message body.

Focusing on just the headers, we might naively represent header names as strings. Turns out, header names are actually quite constrained, per the sequence of RFCs that have incrementally defined HTTP over the years:

- RFC 822 Standard for ARPA Internet Text Messages, released in 1982

- RFC 2616 Hypertext Transfer Protocol - HTTP/1.1, released in 1999

- RFC 7230 Hypertext Transfer Protocol (HTTP/1.1): Message Syntax and Routing, released in 2014 and revising RFC 2616

- RFC 9110 HTTP Semantics, released in 2022 & revising RFC 7230

- RFC 9112 HTTP/1.1, also released in 2022

To be more precise, header names are strings of certain ASCII characters defined by the following BNF grammar (cf. RFC 5234 for the details on BNF in RFCs):

header name = 1*<any ASCII character except CTLs or separators>

CTL = any US-ASCII control character (octets 0 - 31) and DEL (127)

separators = '(' | ')' | '<' | '>' | '@'

| ',' | ';' | ':' | '\' | '"'

| '/' | '[' | ']' | '?' | '='

| '{' | '}' | SP | HT

In other words, a header name is a non-empty list of printable ASCII characters, except for '(', ')', '<', '>', '@', ',', ';', ':', '\', '"', '/', '[', ']', '?', '=', '{', and '}'. A general-purpose string, which nowadays likely supports arbitrary Unicode code points, is capable of holding all sorts of invalid characters, meaning we wouldn't get correctness-by-construction.

Another complication: header names are interpreted in a case-insensitive manner: again, "Host", "host" and "HoSt" should all be interpreted as the same header name.

Finally, a number of names have been registered and have commonly-expected meanings, so that users of a header name abstraction will likely expect to have an enumerated list of mnemonic identifiers (like HOST, or USER_AGENT) available to spare them having to remember the exact name/casing/hyphenation of a given header.

Rust's hyper crate provides a dedicated struct for representing header names (unsurprisingly named HeaderName) where one can:

- only obtain instances from strings (or buffers of byte) that are syntactically valid

- access a list of constant instances of this type for all registered header names

Case insensitivity is also handled:

- the salient constructor maps the input so as to transform illegal values to zeroes, and values corresponding to ASCII upper case to lower

- if the resulting buffer of byte contains any zeroes, fail

- else if the resulting buffer of byte maps to a registered name, return a copy of the corresponding instance

- else return a new instance using that name

I know all this because while working in Idris, I needed a header name abstraction for myself & turned to hyper for inspiration. There are libraries out there for Idris (here & here, e.g.) but I wanted to see if I could do something about step 2), above: if I'm instantiating a header name in a way I know cannot fail, why should I have to handle the error case?

// Arrrrgghghhhhhh 👇

let host = HeaderName::from_str("Host").expect();

What I wanted was something like:

let host = MkHeaderName "Host" bad = MkHeaderName "Bad(In Many Ways)" -- Compile-time error 👆 in ...

I suspect newcomers to dependent types such as myself have a tendency to over-use them. This is my first foray, so perhaps I have. Nevertheless, as Edwin Brady (the creator of Idris) notes, one learns a lot in the attempt.

First Steps

Let's start by modeling "Host", "Content-Length" and custom header names. That should be enough to prove-out any approach and easy to extend with other registered names as needed. The obvious move would be:

export data HeaderName = Host | ContentLength | CustomHeader ?thing

The question is: what should go into that hole? A start might be:

export record CustomHeaderName where constructor MkCustomHeaderName value : List1 Bits8

Bits8 is the Idris type representing a byte. As an aside, we're already encoding syntax into our type: List1 is a List that's guaranteed to be non-empty. But what about encoding our rules into value? It can't be any list of byte, but only bytes holding valid ASCII values (i.e. printable, not a separator).

In Idris, we could encode that into our type by adding a member to our type: a member whose type corresponds to the proposition that the bytes in value are legit. The value of this member would be a proof term for that proposition:

export record CustomHeaderName where constructor MkCustomHeaderName value : List1 Bits8 prf: Token value

(I chose the name "Token" because the salient RFCs use it for the grammar element corresponding to header names). But what would Token look like, exactly? Well, from the perspective of logic, it's a predicate over non-empty lists of byte. That means, computationally, it's a function from List1 Bits8 to Type:

||| An assertion that all octets in a List1 of Bits8 are token ||| constituents public export Token : List1 Bits8 -> Type

Now, the condition of any one byte being a token constituent is easily wrapped-up in a function (from Bits8 to Bool), and we want to assert "this function returns true for every element of a list". The standard library provides something like this– All:

data All : (0 p : a -> Type) -> List a -> Type where Nil : All p Nil (::) : {0 xs : List a} -> p x -> All p xs -> All p (x :: xs)

Surprised that this didn't exist for List1, I quickly adapted it:

||| The `All` predicate adapted to `List1` public export data All1 : (0 p: a -> Type) -> List1 a -> Type where All1_head : {0 x : a} -> p x -> All1 p (x ::: []) All1_tail : {0 xs : List a} -> p x -> All p xs -> All1 p (x ::: xs)

Given a suitable function on bytes:

||| Return True if the given byte contains the ASCII code for a token ||| constituent public export isTokenByte : Bits8 -> Bool

we can defined Token like so:

||| An assertion that all octets in a List1 of Bits8 are token ||| constituents public export Token : List1 Bits8 -> Type Token = All1 (\b => isTokenByte b === True)

Now, in order to construct an instance of CustomHeaderName the caller has to provide not only the bytes that make-up the name, but a proof that they're valid. What would such a thing look like? Well, we could build one representing "Host" like so:

host : CustomHeaderName host = MkCustomHeaderName (72 ::: [111, 115, 116]) (All1_tail Refl (Refl :: [Refl, Refl]))

Cool! We have a "correct-by-construction-at-compile-time" header name. But… yeesh: not exactly "ergonomic" as the Rust folks say. Can we improve this a bit? We can! Idris offers the ability to make members as well as function parameters "auto-implicit", meaning that they are required, but that the compiler will automatically search for them:

export record CustomHeaderName where constructor MkCustomHeaderName value : List1 Bits8 {auto prf: Token value} host : CustomHeaderName host = MkCustomHeaderName (72 ::: [111, 115, 116])

As an aside, this strategy of getting out of having to handling errors by demanding that our callers "prove" their arguments are legit so as to make our function total was nicely discussed in the post Type Safety Back and Forth.

HeaderName

The reader will note that HeaderName and CustomHeaderName are marked "export". This means that the types will be visible outside this module, but none of the data constructors will. So while consumers of this module can use them, they can't actually create them. That's desirable because I haven't quite expressed my desired semantics in my types. Within the module, one could, for instance, do this:

namespace test bad : HeaderName bad = Custom $ MkCustomHeaderName (72 ::: [111, 115, 116]) -- bad != Host

In other words, we've wrote a CustomHeaderName with the ASCII representation of "Host" that's separate & distinct from the Host variant of the HeaderName type– not good. I'm still looking for better ways to solve this, but for now I created a public creation function that will enforce this. First, however, we need to take a look at the NonEmpty predicate from the standard library:

||| Proof that a given list is non-empty. public export data NonEmpty : (xs : List a) -> Type where IsNonEmpty : NonEmpty (x :: xs)

Hopefully, this is becoming familiar: we have a function from lists to Type; a predicate on lists, in other words. There's only one way to get an element of this type: the IsNonEmpty data constructor, which can only be a applied to a list that's, well, non-empty.

With that, we can write MkHeaderName:

||| Given a buffer of octets, together with an assertion that the buffer ||| is not empty, and that it's contents are all token constituents, ||| return a `HeaderName` instance. {- I suppose I could have written this in terms of `List1` and disposed of the first implicit auto parameter, but `List` seems more idiomatic (lots of pre-defined predicates & utility functions defined for it, e.g.) and so, hopefully, more ergonomic for consumers of the function. -} public export MkHeaderName : (buf : List Bits8) -> {auto not_nil : NonEmpty buf} -> {auto is_tok : All (\b => isTokenByte b === True) buf} -> HeaderName MkHeaderName buf@(b :: bs) {not_nil = IsNonEmpty} {is_tok = pf_b :: pf_bs} = {- It's a small pity that I can't just convert these to Char & call `pack`; this would allow my match arms to look like "content-length", for instance. However, if I do that, the primitives involved defeat Idris' proof-finding. -} case bufToLower buf of -- 👇 The ASCII representation of "content-length" [99, 111, 110, 116, 101, 110, 116, 45, 108, 101, 110, 103, 116, 104] => ContentLength -- 👇 The ASCII representation of "host" [104, 111, 115, 116] => Host {- Note that this line will *not* lowercase the header name; I think at this point, having verified that it's not equal, modulo ASCII casing, to a standard header, I'd like to preserve the caller's choice of case. -} _ => CustomHeader $ MkCustomHeaderName (b ::: bs)

This will look unfamiliar to the non-Haskell, non-Idris programmer, so let's walk through it. We have a function of three parameters that returns a HeaderName. There is no provision for failure.

That's because the second & third parameters are proof terms for the proposition that the first parameter, a buffer of byte, is 1) non-empty and 2) made-up of valid token constituents. In other words, the caller can't even invoke the function without proving that the input is legit; failure to do so is a compile-time error.

We've spared the caller the hassle of having to build the proof terms by making the second & third parameters auto-implicit; an invocation of this method looks simply like this:

host : HeaderName host = MkHeaderName [72, 111, 115, 116]

To explain the code, I'd like to walk through the process of actually implementing the function. Suppose we begin with just the function's signature in an Emacs buffer in idris-mode:

public export

MkHeaderName : (buf : List Bits8) ->

{auto not_nil : NonEmpty buf} ->

{auto is_tok : All (\b => isTokenByte b === True) buf} ->

HeaderName

Placing point somewhere in "MkHeaderName" and typing "C-cC-s" (idris-add-clause) gives us this:

public export MkHeaderName : (buf : List Bits8) -> {auto not_nil : NonEmpty buf} -> {auto is_tok : All (\b => isTokenByte b === True) buf} -> HeaderName MkHeaderName buf = ?MkHeaderName_rhs

IOW, we've got the explicit parameter (buf) and a hole for the implementation. Now the proof terms are going to be important to that implementation, so let's bring them into scope by manually adding them on the LHS of the '=':

public export MkHeaderName : (buf : List Bits8) -> {auto not_nil : NonEmpty buf} -> {auto is_tok : All (\b => isTokenByte b === True) buf} -> HeaderName MkHeaderName buf {not_nil} {is_tok} = ?MkHeaderName_rhs

Placing point anywhere in "buf" and typing "C-cC-c" (idris-case-dwim) will break-up our implementation according to the various forms buf could take:

public export MkHeaderName : (buf : List Bits8) -> {auto not_nil : NonEmpty buf} -> {auto is_tok : All (\b => isTokenByte b === True) buf} -> HeaderName MkHeaderName [] {not_nil = not_nil} {is_tok = is_tok} = ?MkHeaderName_rhs_0 MkHeaderName (x :: xs) {not_nil = not_nil} {is_tok = is_tok} = ?MkHeaderName_rhs_1

While it's true that buf, by virtue of being a list, could be the empty list, we know we have a proof term that rules that out. Let's explore that a bit: place the cursor in the second "not_nil" and again hit C-cC-c. We get:

public export MkHeaderName : (buf : List Bits8) -> {auto not_nil : NonEmpty buf} -> {auto is_tok : All (\b => isTokenByte b === True) buf} -> HeaderName MkHeaderName [] impossible MkHeaderName (x :: xs) {not_nil = not_nil} {is_tok = is_tok} = ?MkHeaderName_rhs_1

Idris has realized that there's no way to construct a proof term for NonEmpty buf when buf is the empty list and marked that match impossble. It's harmless to leave it in, but let's remove it for succinctness. We now have:

public export MkHeaderName : (buf : List Bits8) -> {auto not_nil : NonEmpty buf} -> {auto is_tok : All (\b => isTokenByte b === True) buf} -> HeaderName MkHeaderName (x :: xs) {not_nil = not_nil} {is_tok = is_tok} = ?MkHeaderName_rhs_1

If we do the same for the non-empty case, we get:

public export MkHeaderName : (buf : List Bits8) -> {auto not_nil : NonEmpty buf} -> {auto is_tok : All (\b => isTokenByte b === True) buf} -> HeaderName MkHeaderName (x :: xs) {not_nil = IsNonEmpty} {is_tok = is_tok} = ?MkHeaderName_rhs_2

Let's rename x :: xs to be consistent with bytes, and do C-cC-c on the second "is_tok":

public export MkHeaderName : (buf : List Bits8) -> {auto not_nil : NonEmpty buf} -> {auto is_tok : All (\b => isTokenByte b === True) buf} -> HeaderName MkHeaderName (b :: bs) {not_nil = IsNonEmpty} {is_tok = (pf_b :: pf_bs)} = ?MkHeaderName_rhs_3

Idris has figured-out on our behalf that the proof term for is_tok must be the second form above: a proof term that b is a token constituent cons'd onto a proof term for everything in bs being proof constituents.

Alright– armed with much more detailed information about our arguments, we proceed to implement this function. The game is pretty simple: if buf, when lowercased, is the ASCII representation of "host", return Host. If it's the ASCII representation of "content-length", return ContentLength. Else, return Custom, for which we'll have to construct a CustomHeaderName instance. Grant me a function bufToLower that will carry out the required transformation and we get:

MkHeaderName buf@(b :: bs) {not_nil = IsNonEmpty} {is_tok = pf_b :: pf_bs} = case bufToLower buf of -- 👇 The ASCII representation of "content-length" [99, 111, 110, 116, 101, 110, 116, 45, 108, 101, 110, 103, 116, 104] => ContentLength -- 👇 The ASCII representation of "host" [104, 111, 115, 116] => Host _ => CustomHeader $ MkCustomHeaderName (b ::: bs) {prf=All1_tail pf_b pf_bs}

Here, I've included the implicit argument to MkCustomHeaderName for clarity. In fact, Idris is smart enough to find it from the context, which is why it's not included in the actual implementation, above.

Conclusion

This post has gotten a bit long, so I think I'm going to stop here. I'll likely look back on this code in a few months & cringe, but I hope that I've at least demonstrated a few practical uses for dependent types in terms of making illegal state unpresentable in our code. Perhaps I'll write a follow-up post or two on extending MkHeaderName to strings, handling the case where we don't know the input is legit, representing header values, and building a dependently-typed header map (as in, the return type of the lookup function depends on the value of the header name argument).

I wrote-up a demo of all that that's available here where you can type-in header name/value pairs & see how they're parsed:

pack run idris-http-headers.ipkg X-Mine OK Host bree.local 名字 三三

Failed to parse header: 名字, 三三

[host: [MkHostname {host = [98, 114, 101, 101, 46, 108, 111, 99, 97, 108], port = Nothing}, MkHostname {host = [108, 111, 99, 97, 108, 104, 111, 115, 116], port = Just 8000}], x-mine: <raw header values>, x-custom: <raw header values>, content-length: [16]]

11/27/24 17:43